Navigating AI: Simplifying Generative AI and Machine Learning

In the ever-evolving landscape of artificial intelligence (AI), businesses are eager to harness its transformative potential. However, the sheer number of options and a natural hesitance to adopt unfamiliar technologies have left many enterprises at a crossroads.

The brave new world of automation appears especially complex with terms like Machine Learning (ML), Generative AI, and Large Language Models (LLMs) floating around.

Ultimately, a strategic approach to technology adoption must ensure alignment with broader business objectives.

Two of the most important subsets of AI affecting (or potentially affecting) businesses today are Generative AI and Machine Learning (ML). Let’s look at both and explore their pros and cons in order to simplify them.


Understanding the choices: Generative AI vs Machine Learning

In the realm of AI, understanding the distinctions between Generative AI and Machine Learning is essential. While both play a pivotal role in digital transformation, they operate on different principles, each with its unique strengths.

What is Generative AI?

According to IBM, Generative AI refers to deep-learning models that can generate high-quality text, images, and other content based on the data they were trained on.

While the definition may appear limited in scope at first glance, Generative AI can be used to achieve a lot more, including powering conversational chatbots, conducting data analysis, and even discovering new molecules.

It particularly excels at generating data that wasn't explicitly provided during training.

That’s possible because Generative AI often involves the use of a Large Language Model (LLM). Databricks defines LLMs as AI systems that are designed to process and analyze vast amounts of natural language data and then use that information to generate responses to user prompts.

For instance, when asked ‘How is Generative AI related to LLMs?’, ChatGPT 3.5 says, “LLMs, such as OpenAI's GPT (Generative Pre-trained Transformer) series, are pre-trained on vast datasets containing diverse language patterns.”

Large language models are becoming increasingly important in a variety of applications, such as natural language processing, machine translation, code and text generation, and more.

While there is a nuanced difference between LLMs and Generative AI, for the purpose of this conversation, we may use the two terms interchangeably.

What is Machine Learning?

Machine Learning (ML) is a broader category within Artificial Intelligence that focuses on the development of algorithms and models that enable computers to learn from data, often imitating the way humans learn.

ML systems learn patterns and make predictions based on the input data, typically improving in accuracy as they are trained on more, and more representative, data.

Traditional ML can be further classified into supervised and unsupervised learning.

Supervised learning uses labeled data to train a model. Labeled data means that each data point is paired with the correct output, for example an email tagged as ‘spam’ or ‘not spam’.

Unsupervised learning uses unlabeled data, that is, datasets without tags or categories, to find patterns or clusters.
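
The difference between the two can be sketched in a few lines of Python. This is only a minimal illustration on made-up numbers (a hypothetical count of suspicious words per email); the function names are assumptions for this example, and real projects would normally use an established ML library instead.

```python
from statistics import mean

# Supervised: every training example carries a label (hypothetical toy data).
# The single feature is the number of suspicious words in an email.
labeled = [(0, "not spam"), (1, "not spam"), (2, "not spam"),
           (7, "spam"), (8, "spam"), (9, "spam")]

def train_centroids(examples):
    """Learn one average feature value (centroid) per label."""
    by_label = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, value):
    """Return the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Unsupervised: the same numbers without labels; the algorithm only finds groups.
unlabeled = [0, 1, 2, 7, 8, 9]

def two_means(values, iterations=10):
    """Tiny 1-D k-means with k=2; assumes the data really has two groups."""
    low, high = min(values), max(values)
    for _ in range(iterations):
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(near_low), mean(near_high)
    return low, high

centroids = train_centroids(labeled)
print(predict(centroids, 6))   # the supervised model names the class
print(two_means(unlabeled))    # the unsupervised model only reports cluster centers
```

The supervised model can answer "is this spam?" because it was shown labels; the unsupervised one can only say that the data falls into two groups, and a person still has to decide what those groups mean.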

Established ML techniques include linear regression, decision trees, and more, which are well suited to prediction and classification tasks.
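
As a rough sketch of what linear regression does, the snippet below fits a straight line to a handful of invented data points using the standard least-squares formulas. The variable names (`ad_spend`, `sales`) and the numbers are hypothetical and chosen only so the result is easy to check by hand.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one feature: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical data that follows sales = 2 * ad_spend + 1 exactly.
ad_spend = [1.0, 2.0, 3.0, 4.0]
sales = [3.0, 5.0, 7.0, 9.0]

slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 5.0 + intercept  # predicted sales at an unseen spend level
print(slope, intercept, forecast)
```

Real data is never this clean, but the idea is the same: the model learns a small number of parameters from historical data and uses them to predict new values.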

Pros and Cons: Generative AI and Machine Learning

For a business, technology adoption is rarely an easy decision.

Strategic fit, technical feasibility, and financial viability all come into play, along with the inherent risk of adopting a technology that doesn’t fit the bill.

It is for this reason that we offer GENESIS, by Launchcode, an extensive discovery product that answers your questions about your objectives, business landscape, available technology stacks and options (AI, ML, traditional programs, etc.), costs, and timelines. However, we digress.

For now, it might help to start with the essential pros and cons of Generative AI and Machine Learning. The aim here is to at least get the conversations started within your organization and simplify them.


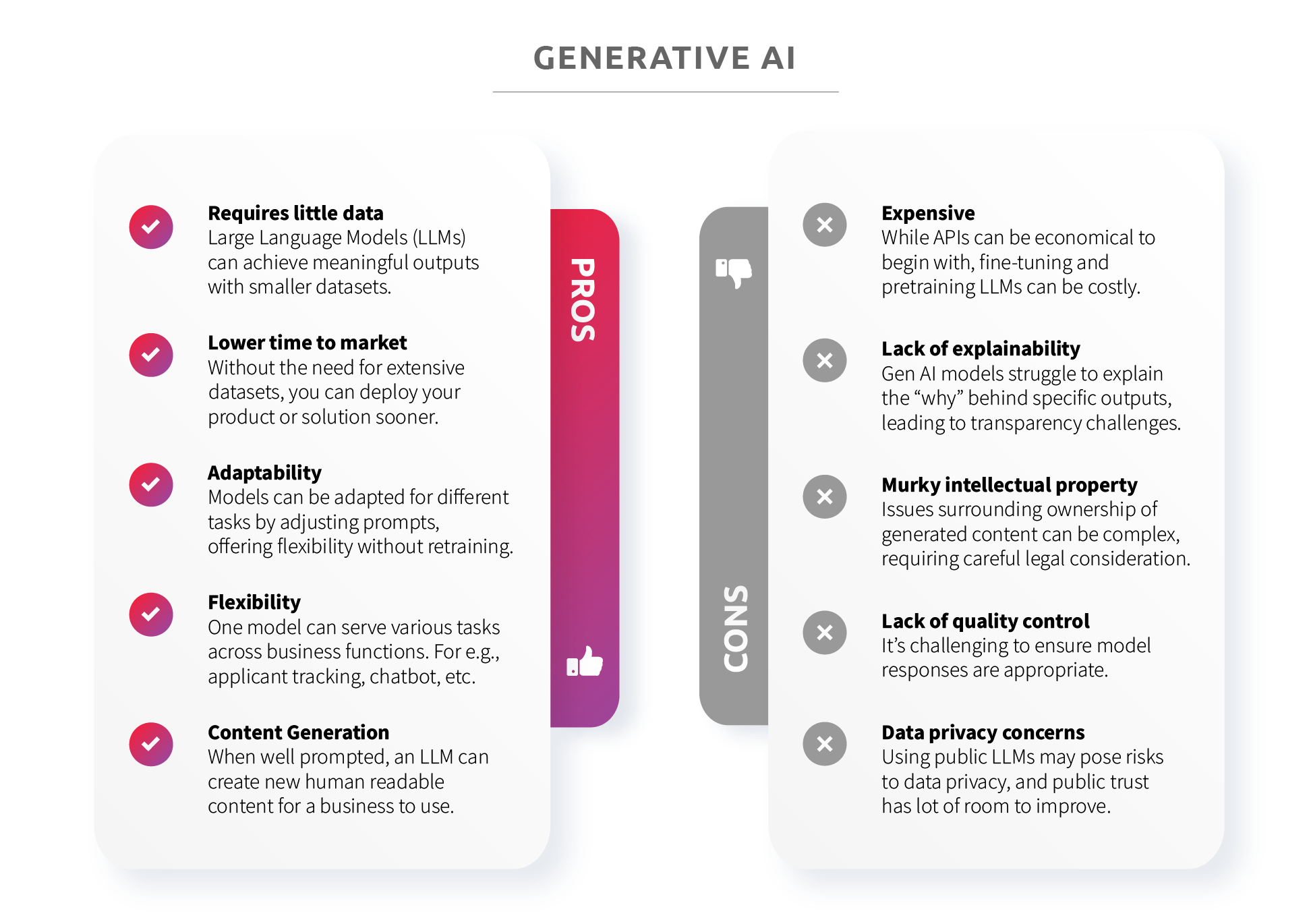

Generative AI: Pros and Cons

Pros

- Low Data Requirement: Generative AI stands out for its ability to produce meaningful results with less data.

- Lower Time to Market: Because it needs less data, it can reduce the time needed to get your product or solution to market.

- Adaptability Through Adjustment: Users can adjust their prompts to refine responses in real time.

- Flexibility Across Tasks: A single Generative AI model can handle various tasks, showcasing flexibility. As mentioned earlier, it can be used to power conversational chatbots, conduct data analysis, and has even been used to discover new molecules.

- Content Generation: When prompted appropriately, an LLM can create new human-readable content for a business to use.

Cons

- Cost of Fine-Tuning: Fine-tuning and pretraining large language models (LLMs) can incur significant costs.

- Lack of Explanation: Generative AI models cannot pinpoint the ‘why’ behind a specific output, which makes their results difficult to explain or audit.

- Intellectual Property Concerns: Issues surrounding ownership of generated content can be complex, requiring careful legal consideration.

- Quality Control: It is challenging to ensure model responses are appropriate.

- Data Privacy Concerns: Enterprises grapple with data privacy concerns when using public LLMs in Generative AI applications. One of the biggest questions is whether their data will be used to train the model further.

- Bias: Depending on your prompt, Generative AI may offer responses that feed into your own bias, and it sometimes even ‘hallucinates’ facts.

Why should businesses use Generative AI?

LLMs work by first analyzing vast amounts of data and creating an internal structure that models the natural language data sets that they’re trained on. Once this internal structure has been developed, the models can then take input in the form of natural language and approximate a good response.
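
To make the idea of an "internal structure" concrete, here is a deliberately tiny sketch: a bigram model that records which word follows which in a training text and then generates new text from that table. This is an assumption-laden toy, vastly simpler than a real LLM (which learns billions of parameters rather than a lookup table), and the corpus and function names are invented for illustration.

```python
import random
from collections import defaultdict

# Hypothetical training text; a real LLM is trained on vastly more data.
corpus = "the model reads text and the model writes text".split()

def build_bigrams(words):
    """Record, for each word, the words that followed it in training."""
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table

def generate(table, start, length, seed=0):
    """Extend a prompt word by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)  # fixed seed so repeated runs match
    output = [start]
    for _ in range(length - 1):
        options = table.get(output[-1])
        if not options:  # no known continuation for this word
            break
        output.append(rng.choice(options))
    return " ".join(output)

bigrams = build_bigrams(corpus)
print(generate(bigrams, "the", 6))
```

The generated sentence may never have appeared in the training text, yet every step in it is a word pair the model has seen. That is, in miniature, what "approximating a good response" means.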

Language has been at the core of all forms of human and technological communications over millennia, providing the words, semantics and grammar needed to convey ideas and concepts. In the AI world, a language model serves a similar purpose, providing a basis to communicate and generate new concepts.

Organizations use Generative AI or LLMs for various purposes, depending on their requirements. Some of the most common use cases are: chatbots and virtual assistants, code generation and debugging, sentiment analysis, information retrieval, image captioning, language-image translation, text classification and clustering, language translation, summarization and paraphrasing, visual question answering (VQA), product recommendation with visual cues, and automated visual content creation.

Generative AI: Summary

Yes, there are pros and cons, but many of the latter depend on implementation.

Simply put, public LLMs, much like off-the-shelf software, want to be everything to everyone. As a result, they truly excel at only a few specific use cases.

However, implemented right, with specific objectives and privacy in mind, as in many of Launchcode’s custom-built technology solutions, Generative AI can work wonders with greater accuracy.


And how do we do that?

There are two types of LLMs: proprietary and open-source.

Proprietary LLMs are owned by a company and can only be used by customers who purchase a license. The license may restrict how the LLM can be used. Open-source LLMs, on the other hand, are free and available for anyone to access, use for any purpose, modify, and distribute, according to IBM.

In the case of open-source models, developers and researchers are free to use, improve or otherwise modify the model.

There is a growing emphasis on democratizing access to LLMs as they become increasingly sophisticated. “Open-source models, in particular, are playing a pivotal role in this democratization, offering researchers, developers and enthusiasts alike the opportunity to delve deep into their intricacies, fine-tune them for specific tasks, or even build upon their foundations,” Unite.ai notes, highlighting some of the top open-source LLMs available to the AI community.

These include Llama 2, which is designed to fuel a range of state-of-the-art applications. A collaboration between Meta and Microsoft has expanded the horizons for Llama 2.

Created through a year-long initiative, BLOOM is designed for autoregressive text generation, capable of extending a given text prompt. Its debut was a significant step in making generative AI technology more accessible.

MPT-7B, short for MosaicML Pretrained Transformer, is a GPT-style, decoder-only transformer model. It boasts several enhancements, including performance-optimized layer implementations and architectural changes that ensure greater training stability.

Falcon LLM is a model that has swiftly ascended to the top of the LLM hierarchy. Notably, Falcon has demonstrated superior performance to GPT-3 with only 75% of the training compute budget, and it requires significantly less compute during inference.

LMSYS ORG has made a significant mark in the realm of open-source LLMs with the introduction of Vicuna-13B. Preliminary evaluations, with GPT-4 acting as the judge, indicate that Vicuna-13B achieves more than 90% of the quality of renowned models like OpenAI ChatGPT and Google Bard.

Then, of course, there is OpenAI’s ChatGPT, possibly the most famous LLM. It skyrocketed in popularity because natural language is such an intuitive interface, one that has made the recent breakthroughs in Artificial Intelligence accessible to everyone.

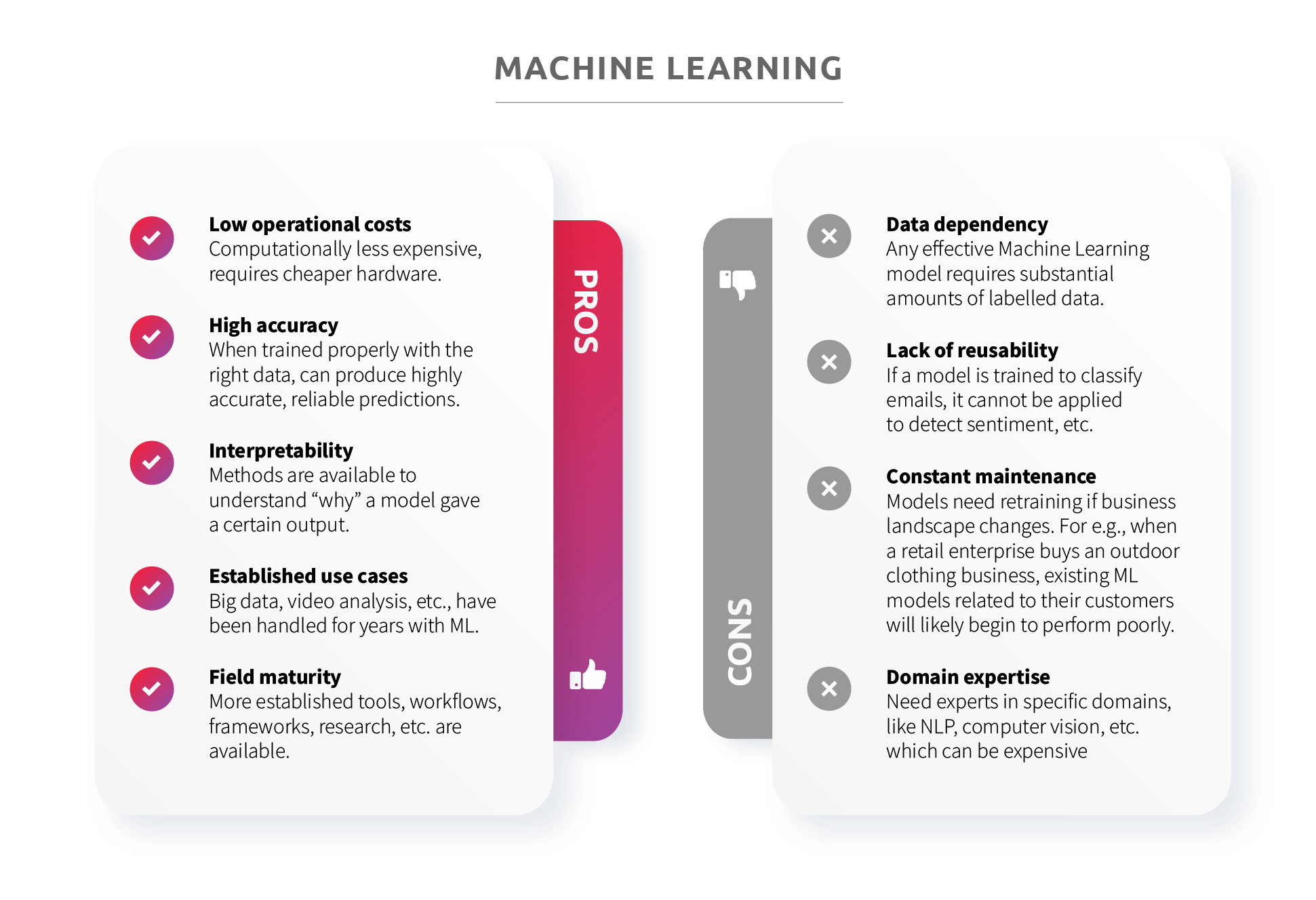

Machine Learning: Pros and Cons

Pros

- Operational Costs: Machine Learning models often prove cost-effective because they are computationally less expensive.

- Accuracy: When trained properly for specific use cases, established ML models are reliable and precise, which makes them indispensable for various industries.

- Interpretability: There are methods available to understand ‘why’ a model gave a certain output, aiding transparency.

- Wide Range of Use Cases: From data analysis to resource optimization, ML models have established use cases across industries.

- Mature Field Knowledge: The ML field boasts a wealth of knowledge, providing a robust foundation for practical applications.

Cons

- Data Dependency: An effective Machine Learning model requires a substantial amount of labeled data.

- Lack of Reusability: A model trained to classify emails, for instance, cannot be applied to detect sentiment.

- Complex Interpretation: Explaining ML model outputs to non-experts can be intricate, limiting accessibility.

- Constant Maintenance: Models need retraining if the business landscape changes. For example, when a retail enterprise buys an outdoor clothing business, existing ML models related to its customers will likely begin to perform poorly.

- Domain Expertise: ML projects need experts in specific domains like NLP, computer vision, etc., which can be expensive.

Why should businesses use Machine Learning?

While LLMs (such as GPT-4) excel at natural language understanding and generation, they are not designed for certain tasks, such as clustering, image analysis, or ranking structured data. Here, other machine learning models and algorithms, such as linear regression, logistic regression, gradient boosting machines (GBMs) like XGBoost, the k-nearest neighbors (KNN) algorithm, and convolutional neural networks (CNNs), would be more appropriate.
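
As one small example of such a model on structured data, the sketch below implements k-nearest neighbors in plain Python. The customer records (age, purchases per year) and the ‘low’/‘high’ value labels are hypothetical, and the features are left unscaled for brevity; in practice features are usually normalized and a tested library implementation is used.

```python
from collections import Counter
from math import dist

# Hypothetical structured records: (age, purchases per year) -> customer value.
customers = [
    ((25, 1), "low"), ((30, 2), "low"), ((28, 1), "low"),
    ((55, 9), "high"), ((60, 8), "high"), ((58, 10), "high"),
]

def knn_predict(rows, query, k=3):
    """Label a new record by majority vote among its k closest known records."""
    nearest = sorted(rows, key=lambda row: dist(row[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict(customers, (57, 9)))  # closest records are all "high"
print(knn_predict(customers, (27, 2)))  # closest records are all "low"
```

A model like this is cheap to run and easy to inspect: the prediction can be explained by pointing at the specific neighboring records that produced it.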

In the end, the choice of model depends on the nature of data and the task in question, and it is important to select the appropriate model to yield the desired results.

Making informed decisions for enterprises

To navigate the choice between Generative AI and ML, businesses must evaluate their specific needs. Considerations such as data requirements, project goals, and long-term scalability should guide decision-making. A strategic approach to technology adoption ensures alignment with broader business objectives.

Large Language Models are expected to keep expanding in terms of the business applications they can handle, and continue to be trained on ever larger sets of data.

That data will increasingly be better filtered for accuracy and potential bias, partly through the addition of fact-checking capabilities.

“It's also likely that LLMs of the future will do a better job than the current generation when it comes to providing attribution and better explanations for how a given result was generated,” a TechTarget report says.

Guided by the right partner, like Launchcode, enterprises can choose the AI model that best meets their needs and optimizes operations.

Interested in adopting AI technologies to gain a competitive advantage? Let’s talk.

Sources

https://www.unite.ai/best-open-source-llms/

https://www.techtarget.com/whatis/definition/large-language-model-LLM

https://www.mlopsaudits.com/blog/llms-vs-traditional-ml-algorithms-comparison

https://www.ibm.com/blog/open-source-large-language-models-benefits-risks-and-types/

https://www.linkedin.com/advice/0/what-pros-cons-using-deep-learning-vs-traditional
